
After a two-year hiatus due to COVID, Fujifilm’s 12th Annual Global IT Executive Summit took place last week in beautiful, warm and sunny San Diego. This year’s Summit theme was “Optimizing storage in the post-Covid, zettabyte age,” an age in which organizations have to do more with fewer resources while the value, volume and retention periods of data continue to increase unabated. It was so good to once again interact face-to-face with members of the storage industry family, including around a hundred customers, vendors, industry analysts and storage industry experts, during the three-day event.

About The Summit

For those not familiar with the Summit, it is an educational conference featuring presentations from industry experts, analysts, vendors and end users about the latest trends, best practices and future developments in data management and storage. A concluding speaker panel with Q & A and peer-to-peer networking opportunities throughout the agenda truly make the Summit a unique storage industry event.

Key End User and Vendor Presentations

As at past Summits (we last convened in San Francisco in October of 2019), we enjoyed presentations from key end users including AWS, CERN, Meta/Facebook and Microsoft Azure. These end users are on the leading edge of innovation and in many ways are pioneering a path forward in the effective management of vast volumes of data that grow exponentially every year.

From the vendor community, we were treated to the latest updates and soon-to-be-unveiled products and solutions from Cloudian, IBM, Quantum, Spectra Logic, Twist Bioscience (DNA data storage) and Western Digital (HDD). The tape vendors shined a light on the continuing innovations in tape solutions, including improvements in ease-of-use and maintenance of automated tape libraries as reviewed by Quantum. New tape applications abound, from object storage on tape in support of hybrid cloud strategies as explained by Cloudian and Spectra, to the advantages of sustainable tape storage presented by IBM. It’s not a question of if, but when organizations will need to seriously address carbon emissions related to storage devices. After all: “no planet, no need for storage,” quipped one attendee. Also included in the tape application discussions were the massive cold data archiving operations presented by CERN and the hyperscale cloud service providers.

Finally, from the world of tape came a chilling, harrowing tale of a real-life ransomware attack experienced by Spectra Logic, and how their own tape products helped keep their data safe through the simple principle of a tape air gap.

Need for Archival Storage

We also heard about the latest progress in DNA data storage from Twist Bioscience and where the world of HDD is going from Western Digital. We are now firmly in the zettabyte age with an expected 11 zettabytes of persistent data to be stored by 2025. Just one zettabyte would require 55 million 18TB HDDs or 55 million LTO-9 tapes. As an industry we are going to need a lot of archival storage! That includes future technologies like DNA, advanced HDDs, optical discs, and of course, highly advanced modern tape solutions. Tape will continue to deliver the lowest TCO, lowest energy consumption and excellent cybersecurity. All the while, tape is supported by a roadmap with increasing cartridge capacities to meet market demand as it unfolds. Certainly, the cloud service providers will leverage all of these storage media at some point as they fine-tune their SLAs and prices for serving everything from hot data to cold archival data.
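For readers who want to check the 55 million figure, here is a minimal back-of-the-envelope sketch. It assumes decimal (SI) units, as storage vendors typically quote them, and uses LTO-9's native (uncompressed) capacity of 18TB. The function and variable names are purely illustrative.

```python
# Decimal (SI) units: an assumption, matching how capacities are usually quoted.
ZETTABYTE = 10**21
TERABYTE = 10**12


def units_needed(total_bytes: int, unit_capacity_bytes: int) -> int:
    """Return the whole number of devices needed to hold total_bytes."""
    # Ceiling division using integers only, to avoid float rounding.
    return -(-total_bytes // unit_capacity_bytes)


# An 18TB HDD and an LTO-9 cartridge (native capacity) hold the same amount.
capacity_18tb = 18 * TERABYTE

per_zettabyte = units_needed(ZETTABYTE, capacity_18tb)
for_2025 = units_needed(11 * ZETTABYTE, capacity_18tb)

print(f"{per_zettabyte / 1e6:.1f} million units per zettabyte")
print(f"{for_2025 / 1e6:.0f} million units for 11 zettabytes")
```

Under these assumptions, one zettabyte works out to roughly 55.6 million drives or cartridges, and the projected 11 zettabytes to roughly 611 million. The sketch ignores compression, redundancy and formatting overhead, so real deployments would differ.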

Fred Moore, Horison Information Strategies

Analysts Share Future Vision

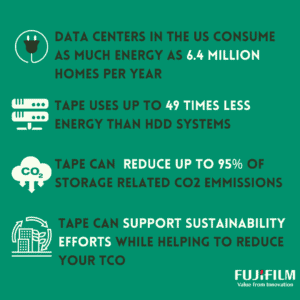

From the analyst community, we were treated to a visionary storage industry outlook from Fred Moore, president of Horison Information Strategies, who shared the fact that 80% of all data quickly becomes archival and is best maintained in the lower tiers of his famed storage pyramid as an active archive or cold archive. Following Fred, Brad Johns of Brad Johns Consulting presented important data showing the 18X sustainability advantage of eco-friendly tape systems compared to energy-intensive HDDs. While we need both technologies, and they are indeed complementary, a tremendous opportunity exists for the storage industry to reduce carbon emissions by simply moving cold, inactive data from HDD to tape systems.

Rounding out the analyst presentations was Philippe Nicolas of Coldago Research with some valuable insights into end user storage requirements and preferences in both the U.S. and Europe.

Innovation from an Industry Expert

From the realm of storage industry experts, we had a compelling talk from Jay Bartlett of Cozaint. With his expertise in the video surveillance market, Jay shared how the boom in video surveillance applications is becoming unsustainable from a content retention perspective. It will become increasingly cost-prohibitive to retain high-definition video surveillance footage on de facto standard HDD storage solutions. Jay revealed a breakthrough allowing for the seamless integration of tier 2 LTO tape with a cost savings benefit of 50%! No longer will we need to rely on grainy, compromised video evidence.

Final Thoughts

The Summit wrapped up with a speaker panel moderated by IT writer and analyst Philippe Nicolas. One big takeaway from this session was that while innovation is happening, it will need to continue if we are to effectively store the zettabytes to come. Innovation means investment in R&D and production of new solutions, perhaps even hybrid models of existing technologies. That investment can’t come from the vendors alone; the hyperscalers will need to have some skin in the game.

In conclusion, the Summit was long overdue. The storage ecosystem is a family, from end users to vendors to analysts and experts. As a family we learn from each other and help each other. That’s what families do. Fujifilm was pleased to bring the family together from around the globe under one roof for frank and open discussion that will help solve the challenges we and our society are facing.

Next came the conversation about renewables and how Greenpeace has done a great job advocating for more use of renewables in data centers, especially the cloud hyperscalers. But from the looks of progress being made on this front, renewable sources of energy likely can’t come on line fast enough or cheaply enough, or in sufficient volume to satisfy the energy needs of the massive data center industry. While Fujifilm is a big fan of renewables (

We as a society, as individuals and as commercial organizations and governments need to take action. No effort is too small, even turning off a single light switch when not needed is worthwhile. Collectively we can make a difference.
