The Sustainable Preservation of Enterprise Data, a New Report by John Monroe of Furthur Market Research and Brad Johns of Brad Johns Consulting LLC

John Monroe of Furthur Market Research, a long-time storage industry expert and former Gartner analyst, together with Brad Johns, a storage industry expert on TCO and energy consumption, recently published a new report entitled “The Sustainable Preservation of Enterprise Data”. This report follows up on John’s most recent report, “Preservation or Deletion: Archiving and Accessing the Dataverse” (March 2023), and his initial report, “The Escalating Challenge of Preserving Enterprise Data” (August 2022). The new report is co-sponsored by Cerabyte, Fujifilm, and IBM.

This new, in-depth report presents refined forecasts of enterprise storage capacity shipments through 2035 and of the growing installed base of enterprise storage, comprising enterprise-grade SSD, HDD, tape, and emerging technologies (new forms of tape, ceramics, DNA, optical, silica, and others). Its findings indicate that enterprise storage will consume an ever-larger share of the available data center power budget, and that IT managers must soon begin proactively deploying fewer SSDs and HDDs and more tape and emerging enterprise storage technologies. This shift will be necessary to stay within the limits of available energy, constrained IT budgets, and ecological goals. Below are some summaries and excerpts taken from the report, and a link is provided to view or download the full report.

A Dataverse of Stunning Dimensions

At the end of 2022, a year in which storage demand declined in unprecedented ways, the active installed base of enterprise data stored on SSD, HDD, and tape media still grew to 4.8 zettabytes (4.8 thousand exabytes, or 4.8 million petabytes), a staggering 53x increase over the 91,000 petabytes (91 exabytes) stored in 2010. Despite another downturn in 2023, the authors still estimate that the active installed base of enterprise data will exceed a massive 40 zettabytes in 2035, more than 475x the 2010 level.
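The growth multiples above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes decimal (SI) prefixes, as storage vendors typically use (1 ZB = 1,000 EB = 1,000,000 PB); the only inputs are the 91 EB (2010) and 4.8 ZB (2022) figures quoted from the report, and the helper name `growth_multiple` is hypothetical. It also shows what installed base a 475x multiple implies, which is consistent with the forecast of "more than 40 zettabytes" in 2035.

```python
# Decimal (SI) storage units, as commonly used by storage vendors (an assumption).
PB_PER_EB = 1_000
PB_PER_ZB = 1_000_000


def growth_multiple(current_pb: float, baseline_pb: float) -> float:
    """Return how many times larger the current installed base is than the baseline."""
    return current_pb / baseline_pb


baseline_2010_pb = 91 * PB_PER_EB      # 91 EB in 2010, figure quoted from the report
installed_2022_pb = 4.8 * PB_PER_ZB    # 4.8 ZB at the end of 2022, figure quoted from the report

multiple_2022 = growth_multiple(installed_2022_pb, baseline_2010_pb)
print(f"2022 vs 2010: {multiple_2022:.1f}x")  # 52.7x, which rounds to the quoted 53x

# Installed base implied by a 475x multiple over the 2010 baseline, in zettabytes.
implied_2035_zb = 475 * baseline_2010_pb / PB_PER_ZB
print(f"475x over 2010 implies about {implied_2035_zb:.1f} ZB")  # about 43.2 ZB
```

Because "more than 475x" corresponds to roughly 43 ZB or more, the two 2035 statements in the report (over 40 ZB and over 475x) are mutually consistent.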

Cool, Cold or Frozen Data Dominates

The problem with all of this enterprise data is that it is perceived as too valuable, either now or potentially in the future, to be deleted. According to the authors, at least 70%, and more probably 80%, of enterprise data becomes cold after 60 days and remains “cool”, “cold” or “frozen”, with access intervals ranging from minutes to days, weeks, years, or even decades. This data has little or no need for the performance of SSDs and HDDs, but it has greatly expanding needs for Sustainability, Immutability, and Security (SIS), which SSDs and HDDs can fulfill neither cost-effectively nor power-efficiently.

Concern for Data Center Energy Consumption

The power demands of enterprise storage will continue to increase as a percentage of the overall data center energy budget. The report shows the share of the data center energy budget dedicated to storage rising from 17% in 2020 to 29% in 2035. According to the authors, data center managers must learn to integrate more cost-effective and power-efficient storage technologies. A multitude of CO2 emission compliance regulations are already in place throughout the world (with much stricter regulations in Europe), and many areas face a growing scarcity of total available energy for data centers. Healthy ecosystems have become more crucial considerations in all IT purchasing decisions, and many data center managers will soon be forced, by upper-level management or by compliance regulations, to use tape and various emerging enterprise technologies as ultra-low-cost, sustainable storage alternatives. The report shows that tape and emerging enterprise technologies, which the authors refer to as the “active archive” tier, will consume 99% less energy than the primary storage tiers of SSDs and HDDs.

Total Cost of Ownership Savings and a Shifting of Exabytes

The rapid growth of the dataverse creates not only energy consumption and CO2 emissions challenges but also cost challenges. The costs of managing multiple zettabytes over increasingly lengthy time periods will continue to swell, driving a steady migration of data to the active archive tier. For 2035, the authors project that the 5-year cost per terabyte of an SSD system will be 33x that of an active archive system (up from 16x in 2020), and that of an HDD system will be 8x (up from 2.4x in 2020). Based on the 2023 CapEx and OpEx estimates in the report, every exabyte of cold or frozen data moved from HDD to tape storage can reduce total costs by more than $16 million over five years. Estimated annual energy costs will also drop by almost $1 million per exabyte, and annual CO2 emissions can be reduced by almost seven kilotons per exabyte. These substantial cost advantages, combined with far lower energy consumption, lead the authors to believe that tape and emerging enterprise technology shipments will grow consistently through at least 2035 and will exceed combined SSD and HDD exabyte deliveries in 2034.
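To see how the per-exabyte figures add up for a larger migration, here is a minimal sketch that scales them linearly. The linear scaling is an assumption, as are the hypothetical names `MigrationEstimate` and `estimate_hdd_to_tape`; the constants are the report's rounded figures ("more than $16 million", "almost $1 million", "almost seven kilotons"), so the results are approximations rather than precise forecasts.

```python
from dataclasses import dataclass

# Rounded per-exabyte figures quoted from the report (2023 CapEx/OpEx basis).
FIVE_YEAR_SAVINGS_USD_PER_EB = 16_000_000     # "more than $16 million over five years"
ANNUAL_ENERGY_SAVINGS_USD_PER_EB = 1_000_000  # "almost $1 million" per year
ANNUAL_CO2_REDUCTION_KT_PER_EB = 7            # "almost seven kilotons" per year


@dataclass
class MigrationEstimate:
    """Hypothetical container for the scaled HDD-to-tape estimates."""
    five_year_savings_usd: float
    annual_energy_savings_usd: float
    annual_co2_reduction_kt: float


def estimate_hdd_to_tape(exabytes: float) -> MigrationEstimate:
    """Scale the per-exabyte figures linearly (an assumption) to a given migration size."""
    if exabytes < 0:
        raise ValueError("exabytes must be non-negative")
    return MigrationEstimate(
        five_year_savings_usd=exabytes * FIVE_YEAR_SAVINGS_USD_PER_EB,
        annual_energy_savings_usd=exabytes * ANNUAL_ENERGY_SAVINGS_USD_PER_EB,
        annual_co2_reduction_kt=exabytes * ANNUAL_CO2_REDUCTION_KT_PER_EB,
    )


# Example: moving 10 EB of cold or frozen data from HDD to tape.
est = estimate_hdd_to_tape(10)
print(f"5-year total cost savings: ${est.five_year_savings_usd / 1e6:,.0f} million")
print(f"Annual energy cost savings: ${est.annual_energy_savings_usd / 1e6:,.0f} million")
print(f"Annual CO2 reduction: {est.annual_co2_reduction_kt:,.0f} kilotons")
```

The three figures are kept separate rather than summed, because the excerpt does not say whether the five-year total-cost figure already includes the energy savings.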

In Conclusion

With the advent of new tape and enterprise emerging storage technologies, the authors have forecast that active archive shipments will expand to comprise more than 50% of the fresh enterprise zettabytes delivered in 2034 and 2035. In the cool, cold and frozen enterprise data layers—which have little or no real need for the performance of SSDs or HDDs, but have greatly expanding needs for Sustainability, Immutability, and Security—the most cost-effective and power-efficient technologies will inevitably prevail, according to the authors, because they make the greatest fiscal and ecological sense.

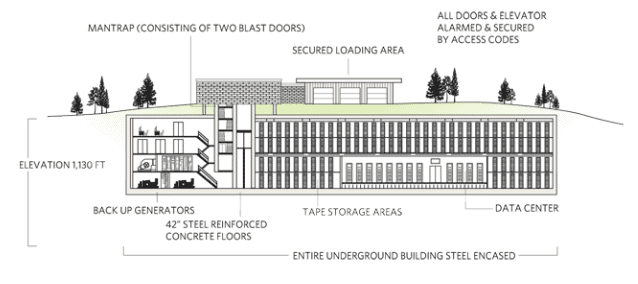

I recently had the opportunity to visit a truly unique data tape vault run by

Truth be told, this was not my first visit to VRI Roxbury. I had toured the facility some 20 years earlier while working for another data tape manufacturer. And while the facility has kept up with modern innovations such as iris-scan security protocols, temperature and humidity monitoring, high-definition video surveillance, and new and improved inventory management techniques, it still provides essentially the same services today that it did 20 years ago. However, given several critical market dynamics, these services are more relevant today than ever before.

As both an active archive and tape evangelist, I’m excited to share how

As both an active archive and tape evangelist, I’m excited to share how