LTO-9 Coming to Market at the Right Time with the Right Features to Address the Many Challenges Facing IT Today

As recently announced by Fujifilm, LTO-9 has arrived and is available for immediate delivery. It comes at a time when the IT industry is hard pressed to manage rampant data growth, control costs, reduce its carbon footprint, and fight off cyber-attacks. LTO-9 is coming to market just in time to meet all of these challenges with the right features: capacity, low cost, energy efficiency, and cyber security.

What a Great Run for LTO

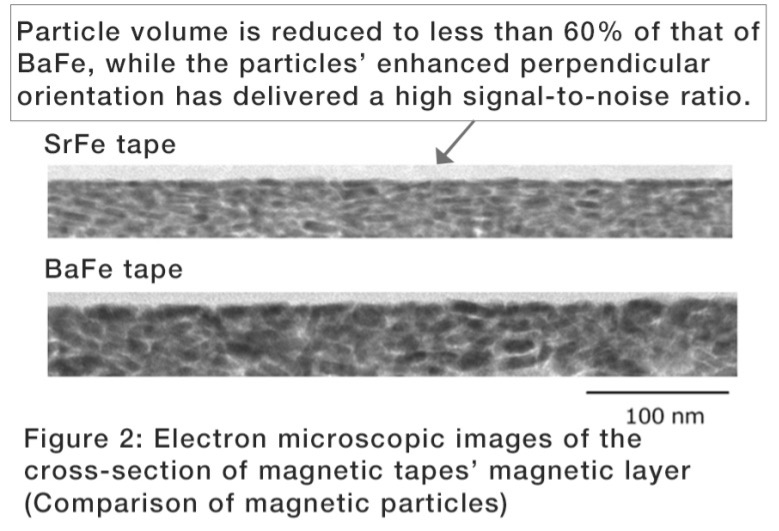

First of all, it is remarkable to look at how far LTO Ultrium technology has come since its introduction. LTO made its market debut in 2000 with the first generation, LTO-1, at 100/200 GB native/compressed capacity on 384 data tracks. Transfer rate was just 20 MB per second native and 40 MB per second compressed. Fast forward 21 years to the availability of LTO-9, now with 18/45 TB native/compressed capacity on 8,960 data tracks and a transfer rate of 400 MB per second native, 1,000 MB per second compressed! In terms of compressed capacity, that’s a 225X increase compared to LTO-1. Since 2000, Fujifilm alone has manufactured and sold over 170 million LTO tape cartridges, a pretty good run indeed.
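The generation-over-generation gains can be checked with a few lines of arithmetic. The sketch below uses only the LTO-1 and LTO-9 figures quoted above and assumes decimal units (1 TB = 1,000 GB); the dictionary keys and the function name are illustrative, not part of any LTO tooling.

```python
# Figures quoted in the article; decimal units assumed (1 TB = 1,000 GB).
LTO1 = {
    "native_gb": 100,
    "compressed_gb": 200,
    "native_mb_s": 20,
    "compressed_mb_s": 40,
    "data_tracks": 384,
}
LTO9 = {
    "native_gb": 18_000,
    "compressed_gb": 45_000,
    "native_mb_s": 400,
    "compressed_mb_s": 1_000,
    "data_tracks": 8_960,
}


def growth_factors(old, new):
    """Return new/old for every metric the two generations share."""
    return {key: new[key] / old[key] for key in old}


factors = growth_factors(LTO1, LTO9)
for metric, factor in factors.items():
    print(f"{metric}: {factor:.1f}x")
```

The compressed-capacity ratio works out to the 225X cited above, while native capacity grows 180X and native transfer rate 20X.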

Capacity to Absorb Bloated Data Sets

We are firmly in the zettabyte age now and it’s no secret that data is growing faster than most organizations can handle. With compound annual data growth rates of 30% to 60% for most organizations, keeping data protected for the long term is increasingly challenging. Just delete it, you say? That’s not an option, as the value of data is increasing rapidly thanks to the many analytics tools we now have to derive value from it. If we can derive value from that data, even older data sets, then we want to keep it indefinitely. But this data can’t economically reside on Tier 1 or Tier 2 storage. Ideally, it will move to Tier 3 tape as an archive or active archive where online access can be maintained. LTO-9 is perfect for this application thanks to its large capacity (18 TB native, 45 TB compressed) and high data transfer rate (400 MB/sec native, 1,000 MB/sec compressed).
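To put the capacity and transfer-rate figures in practical terms, here is a rough sizing sketch. It assumes sustained streaming at the quoted rates, decimal units, and no overhead for load, positioning, or verification; the 1 PB archive size and the function names are hypothetical examples, not figures from Fujifilm.

```python
import math

# LTO-9 figures quoted in the article.
NATIVE_CAPACITY_TB = 18
NATIVE_RATE_MB_S = 400
COMPRESSED_CAPACITY_TB = 45
COMPRESSED_RATE_MB_S = 1_000


def hours_to_fill(capacity_tb, rate_mb_s):
    """Hours to write one full cartridge at a sustained rate (1 TB = 1,000,000 MB)."""
    seconds = capacity_tb * 1_000_000 / rate_mb_s
    return seconds / 3600


def cartridges_needed(archive_tb, capacity_tb=NATIVE_CAPACITY_TB):
    """Whole cartridges required to hold an archive of the given size."""
    return math.ceil(archive_tb / capacity_tb)


print(hours_to_fill(NATIVE_CAPACITY_TB, NATIVE_RATE_MB_S))
print(cartridges_needed(1_000))  # hypothetical 1 PB archive, native capacity
```

Under these assumptions a cartridge fills in 12.5 hours at either the native or the compressed figures, and a hypothetical 1 PB archive fits on 56 cartridges at native capacity.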

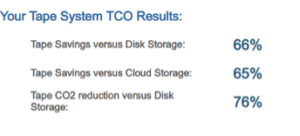

Lowest TCO to Help Control Costs

Understanding your true total cost of ownership is of vital importance today as exponential data growth continues unabated. The days of just throwing more disk at storage capacity issues without any concern for cost are long gone. In fact, studies show that IT budgets on average are growing at less than 2.0% annually, yet data growth is in the range of 30% to 60%. That’s a major disconnect! When compared to disk or cloud options, automated tape systems have the lowest TCO profile, even for relatively low volumes of data below one petabyte. And for larger workloads, the TCO is even more compelling. Thanks to LTO-9’s higher capacity and fast transfer rate, the efficiency of automated tape systems will improve, keeping the TCO advantage firmly on tape’s side.
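The size of that disconnect becomes clearer when the rates are compounded. The sketch below treats 2% as the upper bound on budget growth and uses the 30% and 60% data growth rates quoted above; the five-year horizon and the function names are assumptions chosen purely for illustration.

```python
def compound(rate, years):
    """Growth multiple after compounding an annual rate for the given years."""
    return (1 + rate) ** years


def growth_gap(data_rate, budget_rate, years):
    """How many times faster data has grown than budget over the period."""
    return compound(data_rate, years) / compound(budget_rate, years)


YEARS = 5  # illustrative horizon, not a figure from the article
for data_rate in (0.30, 0.60):
    data = compound(data_rate, YEARS)
    budget = compound(0.02, YEARS)
    print(f"data x{data:.2f}, budget x{budget:.2f}, gap x{growth_gap(data_rate, 0.02, YEARS):.2f}")
```

Over five years, data grows roughly 3.7X to 10.5X while a budget growing at 2% increases by only about 10%, which is why cost per terabyte stored has to fall sharply for the numbers to work.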

Lowest Energy Profile to Reduce Carbon Footprint

Perhaps of even greater concern these days are the environmental impacts of energy-intensive IT operations and their contribution to global warming and climate change. You may have thought 2020 was a pretty bad year, being tied with 2016 for the hottest year on record. Remember the raging forest fires out West or the frequency of hurricanes and tropical storms? Well, it turns out 2021 is just as bad, if not worse, with the Caldor Fire and Hurricane Ida fresh in our memory.

Tape technology has a major advantage in terms of energy consumption, as tape cartridges require no energy unless they are being read or written in a tape drive. Tapes that are idle in a library slot or vaulted offsite consume no energy. As a result, the CO2 footprint is significantly lower than that of always-on disk systems, which spin constantly and generate heat that needs to be cooled. Studies show that tape systems consume 87% less energy and therefore produce 87% less CO2 than equivalent amounts of disk storage in the actual usage phase. More recent studies show that when you look at the total life cycle, from raw materials and manufacturing to distribution, usage, and disposal, tape actually produces 95% less CO2 than disk. When you consider that 60% to 80% of data quickly gets cold, with the frequency of access dropping off after just 30, 60, or 90 days, it only makes sense to move that data from expensive, energy-intensive tiers of storage to inexpensive, energy-efficient tiers like tape. The energy profile of tape only improves with higher capacity generations such as LTO-9.
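Combining the two figures above gives a rough sense of the potential saving. This back-of-the-envelope sketch assumes that storage energy scales linearly with the amount of data stored and that all cold data currently sits on disk; both are simplifying assumptions, and the function name is illustrative.

```python
TAPE_USAGE_SAVING = 0.87  # usage-phase energy reduction quoted in the article


def fleet_energy_saving(cold_fraction, tape_saving=TAPE_USAGE_SAVING):
    """Share of total storage energy saved by moving the cold fraction to tape.

    Assumes energy use is proportional to data stored (a simplification).
    """
    return cold_fraction * tape_saving


for cold in (0.60, 0.80):
    print(f"{cold:.0%} cold data -> {fleet_energy_saving(cold):.1%} less storage energy")
```

Under those assumptions, moving the 60% to 80% of data that has gone cold would cut usage-phase storage energy by roughly 52% to 70%.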

A Last Line of Defense Against Cybercrime

Once again, 2021 is just as bad as, if not worse than, 2020 when it comes to cybercrime and ransomware attacks. Every webinar you attend on this subject will say something to the effect of: “it’s not a question of if; it’s a question of when you will become the next ransomware victim.” The advice from the FBI is pretty clear: “Backup your data, system images, and configurations, test your backups, and keep backups offline.”

This is where the tape air gap plays an increasingly important role. Tape cartridges have always been designed to be easily removable and portable in support of any disaster recovery scenario. Thanks to the low total cost of ownership of today’s high-capacity automated tape systems, keeping a copy of mission-critical data offline, and preferably offsite, is economically feasible – especially considering the prevalence of ransomware attacks and the associated costs of recovery, ransom payments, lost revenue, profit, and fines.

In the event of a breach, organizations can retrieve a backup copy from tape systems, verify that it is free from ransomware and effectively recover. The high capacity of LTO-9 makes this process even more efficient, with fewer pieces of media moving to and from secure offsite locations.

The Strategic Choice for a Transforming World

LTO-9 is the “strategic” choice for organizations because using tape to address long-term data growth and volume is strategic, whereas adding disk is simply a short-term tactical measure. It’s easy to just throw more disks at the problem of data growth, but if you are being strategic about it, you invest in a long-term tape solution.

The world is “transforming” amidst the COVID pandemic: everyone has to do more with less, budgets are tight, and digital transformation has accelerated. We are now firmly in the zettabyte age, which means we have more data to manage efficiently, cost-effectively, and in an environmentally friendly way. The world is also transforming as new threats like cybercrime become a fact of life, not just a rare occurrence that happens to someone else. In this respect, LTO-9 indeed comes to market at the right time with the right features to meet all of these challenges.