How Tape Technology Delivers Value in Modern Data-driven Businesses…in the Age of Zettabyte Storage

The newly released whitepaper from IT analyst firm ESG (Enterprise Strategy Group), sponsored by IBM and Fujifilm and entitled “How Tape Technology Delivers Value in Modern Data-driven Businesses,” focuses on exciting new advances in tape technology that are positioning tape for a critical role in effective data protection and retention in the age of zettabyte (ZB) storage. That’s right: “zettabyte storage”!

The whitepaper cites the need to store 17 ZB of persistent data by 2025. This includes “cold data,” which is stored long-term and rarely accessed and is estimated to account for 80% of all data stored today. Just one ZB is a tremendous amount of data: it equals one million petabytes and would take roughly 55 million 18 TB hard drives or 55 million 18 TB LTO-9 tapes to store. Just like the crew in the movie Jaws needed a bigger boat, the IT industry is going to need higher capacity SSDs and HDDs and higher density tape cartridges! On the tape front, help is on the way, as demonstrated by IBM and Fujifilm in the form of a potential 580 TB capacity tape cartridge. Additional highlights from ESG’s whitepaper are below.
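The arithmetic behind those figures is easy to check. The short Python sketch below assumes decimal (SI) units and native, uncompressed capacities; the helper name `units_needed` is made up for illustration and does not come from the whitepaper.

```python
# Decimal (SI) storage units, expressed in bytes (assumption: no binary units).
TB = 10**12
PB = 10**15
ZB = 10**21

def units_needed(total_bytes: int, unit_capacity_bytes: int) -> int:
    """Whole devices needed to hold total_bytes (ceiling division)."""
    return -(-total_bytes // unit_capacity_bytes)

petabytes_per_zb = ZB // PB                   # 1,000,000 PB in one ZB
lto9_per_zb = units_needed(ZB, 18 * TB)       # 18 TB drives or LTO-9 cartridges
srfe_per_zb = units_needed(ZB, 580 * TB)      # potential 580 TB cartridges

print(f"{petabytes_per_zb:,} PB per ZB")
print(f"{lto9_per_zb:,} drives or cartridges at 18 TB")
print(f"{srfe_per_zb:,} cartridges at 580 TB")
```

Under these assumptions, one ZB needs about 55.6 million 18 TB units but only about 1.7 million cartridges at the demonstrated 580 TB potential capacity.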

New Tape Technology

IBM and Fujifilm set a new areal density record of 317 Gb/sq. inch on linear magnetic tape, which translates to a potential native cartridge capacity of 580 TB. The demonstration features a new magnetic particle called Strontium Ferrite (SrFe), which has the ability to deliver capacities that extend well beyond disk, LTO, and enterprise tape roadmaps. SrFe magnetic particles are 60% smaller than the current de facto standard Barium Ferrite magnetic particles, yet exhibit even better magnetic signal strength and archival life. On the hardware front, the IBM team has developed tape head enhancements and servo technologies that support even narrower data tracks, contributing to the increase in capacity.

The Case for Tape at Hyperscalers and Others

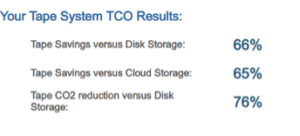

Hyperscale data centers are major new consumers of tape technologies due to their need to manage massive data volumes while controlling costs. Tape is allowing hyperscalers, including cloud service providers, to achieve business objectives by providing data protection for critical assets, archival capabilities, easy capacity scaling, the lowest TCO, high reliability, fast throughput, low power consumption, and air gap protection. But tape also makes sense for small to large enterprise data centers facing the same data growth challenges, including the need to scale their environments while keeping their costs down.

Data Protection, Archive, Resiliency, Intelligent Data Management

According to an ESG survey presented in the whitepaper, tape users identified reliability, cybersecurity, long archival life, low cost, efficiency, flexibility, and capacity as the top attributes of tape usage today and favor tape for its long-term value. Data is growing relentlessly, and retention periods are lengthening as the value of data increases thanks to the ability to apply advanced analytics to derive a competitive advantage. Data is often kept for longer periods for compliance, regulatory, and corporate governance reasons. Tape is also playing a role in cybercrime prevention with WORM, encryption, and air gap capabilities. Intelligent data management software, typical in today’s active archive environments, automatically moves data from expensive, energy-intensive tiers of storage to more economical and energy-efficient tiers based on user-defined policies.
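As a rough illustration of policy-based data movement, the Python sketch below moves files that have not been accessed for a user-defined number of days from a disk tier to a tape tier. Everything in it is hypothetical: the `FileRecord` type, the `apply_policy` function, the tier names, and the 90-day threshold are assumptions made for the example, not features of any particular product described in the whitepaper.

```python
from dataclasses import dataclass

@dataclass
class FileRecord:
    """Hypothetical catalog entry for a stored file."""
    name: str
    days_since_access: int
    tier: str = "disk"

def apply_policy(files, cold_after_days=90):
    """Move files idle for at least cold_after_days to the tape tier.

    Returns the names of the files that were moved.
    """
    moved = []
    for f in files:
        if f.tier != "tape" and f.days_since_access >= cold_after_days:
            f.tier = "tape"
            moved.append(f.name)
    return moved

files = [
    FileRecord("q1-report.pdf", 3),
    FileRecord("2019-logs.tar", 400),
    FileRecord("raw-footage.mov", 120),
]
moved = apply_policy(files, cold_after_days=90)
print(moved)
```

Real active archive software typically weighs more than last-access age, but the pattern of a user-defined rule driving automatic movement between tiers is the same.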

ESG concludes that tape is the strategic answer to the many challenges facing data storage managers, including the growing amount of data as well as TCO, cybersecurity, scalability, reliability, energy efficiency, and more. IBM and Fujifilm’s technology demonstration ensures the continuing role of tape as data requirements grow in the future and higher capacity media is required for cost control, with CO2 reductions among the added benefits. Tape is a powerful solution for organizations that adopt it now!
