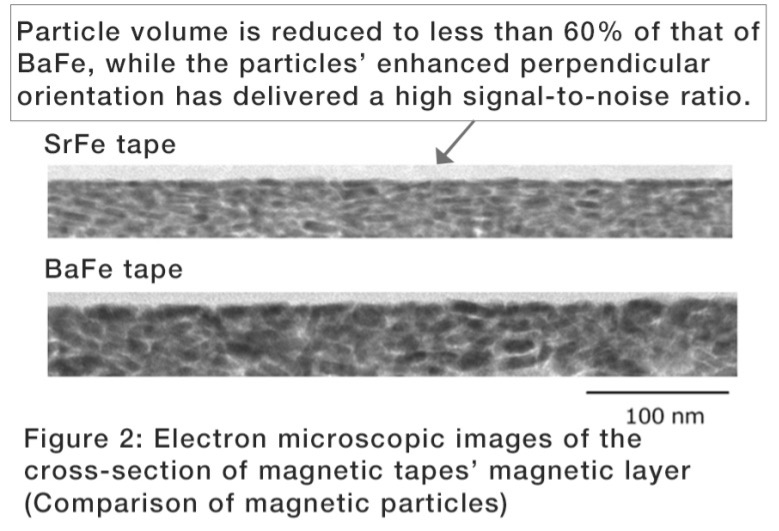

In mid-December 2020, Fujifilm announced in a press release that, together with IBM Research, it had achieved a record areal density of 317 Gbpsi (billion bits per square inch) on next-generation magnetic tape coated with Strontium Ferrite (SrFe) magnetic particles. This areal density would yield a native storage capacity of 580TB on a standard-sized data cartridge. That’s almost 50 times the capacity of today’s LTO-8 tape, which is based on Barium Ferrite (BaFe) and holds 12TB native.

Shortly after the news came out, I was on a call with a member of our sales team discussing the announcement. He asked me when the 580TB cartridge would be available and whether there was any pricing information yet. He was also curious about transfer speed performance. I had to admit that those details were still TBD, so he asked me, “What are the 3 big takeaways from the release?” So let’s dive into what those takeaways are.

Tape has no fundamental technology roadblocks

To put tape reaching 317 Gbpsi in perspective, we have to understand just how low that areal density is in comparison to HDD technology. Current HDD areal density is already at or above 1,000 Gbpsi while achieving 16TB to 20TB per drive on as many as nine disk platters. This level of areal density is approaching what is known as the “superparamagnetic limit,” where the magnetic particle is so small that its magnetization starts to flip back and forth between polarities on its own. Not ideal for long-term data preservation.

So to address this, HDD manufacturers have employed things like helium-filled drives, which allow closer spacing between disk platters and so make room for more platters and more capacity. HDD manufacturers are also increasing capacity with new recording techniques such as heat-assisted (HAMR) and microwave-assisted (MAMR) magnetic recording. As a result, HDD capacities are expected to reach up to 50TB within the next five years or so. The reason tape can potentially reach dramatically higher capacities is that a tape cartridge contains over 1,000 meters of half-inch-wide tape and therefore has far greater surface area than a stack of even eight or nine 3.5-inch disk platters.
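To make the surface-area comparison concrete, here is a rough back-of-the-envelope sketch. The tape length and width come from the text above. The platter radii are illustrative assumptions, not published specifications. The calculation also assumes one recorded side for tape and two for each platter, and it ignores servo bands and other unusable regions.

```python
import math

# From the text: over 1,000 meters of half-inch-wide tape.
TAPE_LENGTH_M = 1000.0
TAPE_WIDTH_M = 0.5 * 0.0254  # half an inch in meters

# Assumed platter geometry (illustrative values, not vendor specs).
PLATTER_OUTER_RADIUS_M = 0.0475  # assumes a ~95 mm platter diameter
PLATTER_INNER_RADIUS_M = 0.0125  # assumes an unusable hub region
PLATTER_COUNT = 9
SIDES_PER_PLATTER = 2


def tape_area_m2(length_m=TAPE_LENGTH_M, width_m=TAPE_WIDTH_M):
    """Area of one side of the tape, in square meters."""
    return length_m * width_m


def platter_stack_area_m2(outer_m=PLATTER_OUTER_RADIUS_M,
                          inner_m=PLATTER_INNER_RADIUS_M,
                          count=PLATTER_COUNT,
                          sides=SIDES_PER_PLATTER):
    """Total ring-shaped recording area across all platter surfaces."""
    ring_area = math.pi * (outer_m ** 2 - inner_m ** 2)
    return ring_area * sides * count


tape_area = tape_area_m2()
disk_area = platter_stack_area_m2()
area_ratio = tape_area / disk_area

print(f"Tape area:          {tape_area:.2f} m^2")
print(f"Platter stack area: {disk_area:.3f} m^2")
print(f"Tape / disk ratio:  {area_ratio:.0f}x")
```

Under these assumed dimensions, the tape offers roughly 100 times the recordable surface of the platter stack, which is why a much lower areal density can still add up to a larger capacity per cartridge.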

But let’s also look at track density in addition to areal density. Think about a single strand of human hair, which is typically about 100 microns (100,000 nanometers) wide. If a single data track on HDD is 50 nanometers wide, you are looking at 2,000 data tracks for HDD across the width of a single strand of human hair! For tape, with a track width of approximately 1,200 nanometers, you are looking at only about 83 data tracks. But this is actually a positive for tape technology because it shows that tape has a lot of headroom in both areal density and track density, and that will lead to higher capacities and help to maintain a low TCO for tape.
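The human-hair comparison is simple division, and a short sketch makes the arithmetic easy to check. The widths are the ones quoted in the text. The function and variable names are just for illustration.

```python
HAIR_WIDTH_NM = 100 * 1000   # 100 microns expressed in nanometers
HDD_TRACK_WIDTH_NM = 50      # HDD track width quoted in the text
TAPE_TRACK_WIDTH_NM = 1200   # approximate tape track width quoted in the text


def tracks_across(width_nm, track_width_nm):
    """How many tracks of the given width fit side by side across width_nm."""
    return width_nm / track_width_nm


hdd_tracks = tracks_across(HAIR_WIDTH_NM, HDD_TRACK_WIDTH_NM)
tape_tracks = tracks_across(HAIR_WIDTH_NM, TAPE_TRACK_WIDTH_NM)

print(f"HDD tracks per hair width:  {hdd_tracks:.0f}")
print(f"Tape tracks per hair width: {tape_tracks:.1f}")
print(f"HDD packs tracks {hdd_tracks / tape_tracks:.0f}x more densely")
```

The tape figure works out to 83.3 tracks, which the text rounds. The gap in track density between the two technologies is a factor of 24, which is the headroom the paragraph refers to.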

But let me make it clear that this is not about HDD vs. tape. We are now in the zettabyte age: in 2019, just over one zettabyte (1,000 exabytes) of new storage capacity across all media types shipped into the global market. According to IDC, that number will balloon to a staggering 7.5 ZB by 2025. We will need a lot of HDDs and a lot of tape (and flash for that matter) to store 7.5 ZB!

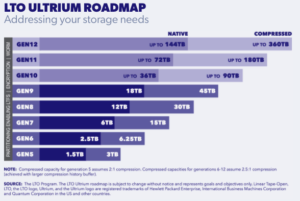

Tape technology will be able to fulfill tape format roadmaps

While LTO Gen 12 at 144TB native capacity seems pretty impressive, it should be easily achievable given the SrFe announcement of the ability to hit 580TB. One might even feel safe projecting that the LTO roadmap could at some point be extended beyond Gen 12. That’s important for the strategic planning involved in architecting a long-term archive. Some data retention requirements can be as long as 100 years. We know that tape drives are good for 7 to 10 years and that libraries can stick around for decades with component upgrades and expansions. Given the need for long-term planning and making a wise investment in the library infrastructure, it’s good to know that tape will allow for future migrations and that hardware investments made today are a good bet.

Tape will remain a strategic component for long-term data storage

The final takeaway has to do with tape’s role going forward. In this rapidly expanding zettabyte age, organizations need to optimize their storage infrastructure to take advantage of flash, HDD and tape, and eventually even DNA. There is a right place and time in the data lifecycle for all of these storage media.

What is needed are intelligent data management solutions that classify data and move it automatically, by user-defined policy, from short-term expensive tiers of storage to long-term economy tiers. It’s all about tiering. An active archive implementation is a great example of this.
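As a minimal sketch of what policy-driven tiering can look like, the example below assigns data to a tier based only on how recently it was accessed. Everything here is hypothetical: the `FileRecord` type, the `choose_tier` function and the 30-day and 180-day thresholds are illustrative assumptions, not the behavior of any particular data management product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; a real policy would be defined by the user.
FLASH_MAX_AGE = timedelta(days=30)
HDD_MAX_AGE = timedelta(days=180)


@dataclass
class FileRecord:
    path: str
    last_accessed: datetime


def choose_tier(record, now):
    """Pick a storage tier from the time since the file was last accessed."""
    age = now - record.last_accessed
    if age <= FLASH_MAX_AGE:
        return "flash"  # hot data stays on the fastest, most expensive tier
    if age <= HDD_MAX_AGE:
        return "hdd"    # warm data moves to a cheaper disk tier
    return "tape"       # cold data goes to the economy tier


now = datetime(2021, 1, 1)
records = [
    FileRecord("/projects/current/report.docx", now - timedelta(days=3)),
    FileRecord("/projects/2020/q2-results.xlsx", now - timedelta(days=120)),
    FileRecord("/archive/2015/raw-footage.mov", now - timedelta(days=2000)),
]

for record in records:
    print(f"{record.path} -> {choose_tier(record, now)}")
```

A real policy would likely weigh more than access age, such as data type, retention requirements and retrieval time objectives, but the structure stays the same: classify first, then move the data to the cheapest tier that still meets its needs.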

As we head into the post-COVID-19 digital economy, cost containment of rapidly growing unstructured data will be critical. Tiering will be mandatory based on the following factors:

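So to wrap up, the 3 big takeaways of 580TB tape are that tape has no fundamental technology roadblocks, that tape technology will be able to fulfill tape format roadmaps, and that tape will remain a strategic component for long-term data storage.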

It’s all about getting the right data in the right place at the right time!